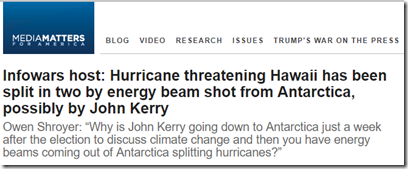

Why is John Kerry going down to Antarctica just a week after the election to discuss climate change and then you have energy beams coming out of Antarctica splitting hurricanes?

-- Owen Shroyer, Infowars

We are under attack.

America is under attack.

From weather weapons and energy beams wielded by John Kerry, if you believe Alex Jones.

It’s true. Quite literally. But not in the manner described by hysterical conspiracy theorists. And nothing so simple or as silly or as easily countered.

America is under attack. It’s true. We are under attack in a war that very few Americans, most especially including those in charge, or those pushing conspiracies, understand. Worse, it’s not just that we don’t understand this war, it’s that so very many Americans aren’t even capable of understanding this conflict.

And thus America is ill-equipped to fight off this assault.

A study published today in Research and Practice describes how Russian operators have weaponized health communication.

Specifically, the authors, David A. Broniatowski, PhD; Amelia M. Jamison, MAA, MPH; SiHua Qi, SM; Lulwah AlKulaib, SM; Tao Chen, PhD; Adrian Benton, MS; Sandra C. Quinn, PhD; and Mark Dredze, PhD, show how information warfare pushed by social media automation (i.e. "bots") and directed by troll accounts can use wedge issues like the conspiracy theories surrounding vaccinations to sow measurable chaos and division in the American population.

I have some significant experience in this field.

This is what I used to do for a living.

In the beginning I was a technical cryptologist. A codebreaker specializing in electronic signals. Back in those ancient days of the early 1980s, the world was a very different place. As now, America faced myriad threats, but most of those took a backseat to the Soviet Union.

Over the years, the politics and the enemies changed, the tools evolved, but for most of the 20th Century intelligence work remained pretty much the same.

All that changed in the last 20 years.

And the most profound change in intelligence work is volume. Volume of information.

You see, back when I first joined the Intelligence world, bandwidth was a scarce commodity. Communication channels from out on the pointy end of the stick where I was back to certain three-letter agencies in Washington – and then on to the decision-makers and perhaps the public – were limited.

Extremely limited.

Limited in a fashion most of you who are reading this from your smartphones are unlikely to understand.

Let me give you an example: We often reported via encrypted satellite text-based messaging systems that operated at 75 baud.

No, that’s not a typo. 75 baud.

What’s a baud?

Exactly.

Unless you’ve worked with old electronic communications systems, you’re likely unfamiliar with that term, baud. It’s a measurement of information rate, a unit of transmission speed that describes signal state changes per second in an analog shift-key teletype system.

Huh?

Right. Huh indeed.

Let me put it in modern terms: in a simple two-state system like that teletype, one baud is equivalent to one bit per second.

You, of course, looking at your 4G smartphone, you are much more familiar with megabits or even gigabits per second. Millions, billions, of bits of information per second. Every second.

But back in the day, information moved at a much slower rate.

Depending on the character-set/encoding system used, it takes anywhere from 5 bits (Baudot code) to 21 bits (Unicode) to make one character, i.e. a letter or number or other special character such as a period or question mark. The very symbols you are reading right now. Back then, our systems generally used 8-bit character sets (ASCII). Meaning that it took eight state changes, eight bauds, to send one character. Now, if you’re running at 75 baud, each bit (the smallest unit of information in the system) is then 13.3 milliseconds in length, or about 13 one-thousandths of a second to transmit. Multiply that times eight and you find that it takes a little over a tenth of a second to transmit one character – and in practicality, longer, because we were pushing those bits through high-level encryption systems, and through multiple levels of bi-directional transmission error-checking.
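For anyone who wants to check that arithmetic, here is a minimal Python sketch. It assumes one bit per signal change (so 75 baud means 75 bits per second) and ignores the encryption and error-checking overhead mentioned above. The function names and the 500-character report length are made up for illustration and do not describe any real system.

```python
def bit_time_ms(baud: float) -> float:
    """Duration of one bit in milliseconds, assuming one bit per signal change."""
    return 1000.0 / baud


def seconds_per_character(baud: float, bits_per_char: int = 8) -> float:
    """Raw time to send one character, ignoring encryption and error-checking overhead."""
    return bits_per_char / baud


def seconds_per_message(baud: float, characters: int, bits_per_char: int = 8) -> float:
    """Raw time to send a message of the given length (hypothetical helper)."""
    return characters * seconds_per_character(baud, bits_per_char)


if __name__ == "__main__":
    baud = 75
    print(f"one bit:        {bit_time_ms(baud):.1f} ms")
    print(f"one character:  {seconds_per_character(baud):.3f} s")
    # 500 characters is an arbitrary illustrative report length, not a real format.
    print(f"500 characters: {seconds_per_message(baud, 500):.1f} s")
```

Under those assumptions, even a lean 500-character report needs most of a minute of raw channel time before any overhead is added, which is why every character had to earn its place.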

Now, what all that technical gibberish means in practical terms is that sending data was slow.

Very slow.

In the amount of time it took to send one character back then, you could have reloaded this webpage on your smartphone half a dozen times over. Or more.

In the last decade of the 20th Century, and the first two decades of this one, communication speed and the amount of data that we can send reliably – even from a satellite cell phone in the warzone, far out on the pointy end of the stick – has increased by several orders of magnitude, i.e. hundreds of thousands of times. In some cases, millions.

Volume.

Volume of information.

And that’s not necessarily a good thing. Not when it comes to quality and reliability of information.

Not when it comes to fact and truth.

You see, back in the day, every bit was precious. So, when we gathered information, sometimes at great risk to ourselves, that raw intelligence was examined on site by analysts, specialists in that particular target. If it was deemed worthy of further examination, then it was formatted into electronic reports. And those reports had a very specific structure, they were very lean, using only the characters necessary to relay the information and no more. Before the reports were transmitted, they were examined by a very experienced senior NCO – who was typically also an analyst. Then, in many cases, the information was checked one final time by an officer. The report was sent up-echelon to a regional processing center, where it was again examined by a team of analysts and combined with other information (a process that was in those days known as “fusion”), and then that report was examined at multiple levels and forwarded up the chain of command to one of those aforementioned three-letter agencies back in Washington, where it was combined with yet more information and turned into national intelligence assessments for those in the White House and Congress.

We had plenty of people, what we didn’t have was bandwidth and computing power.

So, it was imperative that every bit sent was as accurate and as reliable as possible, so as to make the absolute best use of our resources.

And the side effect of this painstaking process was that the final intelligence product was of very high quality and presented to the president by those who were very, very familiar with the targets and who specialized in explaining this information to politicians in a fashion they could understand.

Now, don’t get me wrong here, how that information was interpreted and used by politicians at the top end of the chain of command is a different matter – and likewise when that information was declassified (in some cases) and pushed out to the general public. And it wasn’t just the intelligence community, news organizations labored under those same technological restrictions and the same biased-interpretation by the politicians and the public. Which had similar impact on the information they presented and how it entered the public consciousness.

That said, the information that arrived at the consumer was often as accurate and as reliable as is humanly possible.

All of that changed with the advent of dramatically increased bandwidth and processing power.

Over the last few decades the information cycle has become highly compressed, increasingly so.

And as the volume of raw information increases exponentially, at the same time the ability of both the news media and the intelligence community to analyze it and filter out the noise has dramatically decreased.

It has become utterly impossible to examine each piece of information in the detail we once did.

And as such, it is utterly impossible to ensure the quality and reliability and accuracy of that information.

Now, here’s the important part, so pay attention: Our society, both the decision-makers who run it and the citizens who daily live in it, is habituated to having information processed, analyzed, and presented in a fashion where they can have reasonable confidence in that information.

And that information thus directly shapes our worldview.

Up until recently, the average politician, the average citizen, didn’t have to be an information analyst, didn’t have to have critical information processing skills, because the information system for the most part did that on the front end. The consumer very rarely received raw information about the world outside of their own immediate sphere of observation.

Almost everything you knew about the greater world was filtered through information processing systems by experts.

That is no longer true in any fashion.

And yet, we operate as if it still is.

You can see this most clearly in the older generation, many of whom still believe that “they couldn’t print it if it wasn’t true.”

This is bad enough in the general population, but it is a disaster of unmeasurable scale when government, and society itself, begins to operate on this unstable foundation.

The massive increase in information volume means that all of us are daily bombarded with a firehose of raw information, unprocessed, unfiltered.

And the vast, vast majority of you are ill-equipped to handle this in any fashion.

Most of the world lacks the training, the tools, and the support to filter bad information from good, to determine the validity of intelligence. And so, increasingly, we live in a world of malleable reality, one where politicians and media personalities tell you with a straight face “truths are not truths” and “there are facts and alternative facts.”

This problem became millions of times worse with the advent of social media.

And this situation, this world of alternative facts and shifting truth and bottomless raw unfiltered information piped directly into the minds of the population without error-checking and expert interpretation creates new and unique vulnerabilities that can be exploited on a massive, global, scale in a fashion that has never been done before.

Information warfare.

More powerful, more far-reaching, more scalable, more destructive to the very fabric of our society than any nuclear bomb.

This form of warfare is incredibly powerful, far more so than any other weapon – because it reaches directly into your mind and shapes how you see the world.

Information warfare is infinitely scalable, it can target a single individual, or the entire global population, it can target a single decision-maker, a government, a population, or alter the course of history.

For example: The president of this country watches a certain news/talk/infotainment show. Every day. Without fail. And that show, the information presented there, directly shapes how he sees the world. You can watch this happen daily in real-time. Those who control that show have direct and immediate influence on the president, and thus on the country, and thus on a global scale. It is an astounding national security vulnerability. One our enemies are well, well aware of, and one that our own counter-intelligence people cannot plug due to the very nature of their own Commander-in-Chief.

This is unprecedented in our history.

Over time, Information Warfare has had many names and been implemented in many, many ways – sometimes hilariously unsuccessful, sometimes horrifyingly effective, often somewhere in between. Deception warfare, communications warfare, electronic warfare, psychological warfare, perception management, information operations, active measures, marketing, whatever you call it, this form of weaponized intelligence really came into its own with the advent of social media and the 24/7 news cycle.

And unlike conventional weapons, information warfare can be wielded by a handful of operators, working from a modest office with no more infrastructure than a smartphone and a social media account.

Weaponized information.

Active measures.

This was my specialty. Over time, as the intelligence community changed, as technology evolved, my own career changed with it. I went from being a junior technician specializing in electronic signals to an information warfare officer, one of the first in my field to be specifically designated as such. And one of the first to go to war specifically as such. Now I'm not going to discuss the details of my own military career any further, because those specifics are still highly classified. Suffice it to say, this is a field with which I am intimately familiar. And one at which I was very, very good.

And from that experience, I will tell you this:

An educated population trained from an early age in critical thinking, whose worldview is based on fact, validated evidence, and science, is the single strongest defense – the only true defense -- against this form of assault.

But, we don’t live in that world.

We can’t put the genie back in the bottle. And we lack that defense, deliberately so. Because just as our own enemies benefit from a population incapable of critical thought, so do those who seek political power within our own nation.

A population skilled in critical thought is the best defense against information warfare waged by our enemies, but it is also the best defense against tyranny, against the corruption of political and religious power.

But, again, we don’t live in that world.

And as such, given the state of America, the anti-vaxxer conspiracy theory was an easy target.

It's not the only one, or the easiest one, or the wedge issue most vulnerable to manipulation, or the one most likely to be pushed from a low-grade irritant into a full-on pitched battle among the population and thus one that directly influences the decision-makers in charge of our government.

It is simply an easy target. One of many. Low hanging fruit. An obvious point of exploitation.

It’s not the conspiracy theory itself that is the point of vulnerability, it’s the conditions, the worldview, that lead to such persistently wrong-headed beliefs.

You see, it is religious nuts, the fanatical partisan, conspiracy theorists, the uneducated, the deliberately ignorant and the purposely contrarily obtuse who are the perfect targets for Information Warfare.

These are the perfect suckers, easily manipulated and turned into unwitting tools of the enemy.

All you have to do is tell them what they want to hear.

And in America’s case, this target is uniquely vulnerable, uniquely fertile, because they have been conditioned by centuries of first religious nonsense given equal footing with science and then decades of conspiracy theory “infotainment” media treated as fact for profit.

If you want to know how we got here, all you have to do is look for the “Infowars” bumper-sticker proudly and unashamedly displayed on the car ahead of you in traffic.

It’s not just Alex Jones.

Or Rush Limbaugh or Glenn Beck or Michael Savage and all the others who sell conspiracy theory as fact.

It’s not just Jerry Falwell and Ken Ham and Joel Osteen and all the other holy joes who push their religious fraud as truth.

It’s not just the politicians who lie to you every day for their own profit and power.

It’s a population that utterly lacks the ability to process information in any reliable fashion, lacks intellectual rigor, lacks intellectual curiosity, and worse, lacks any desire to acquire such.

This is the population described by Orwell in his novel 1984.

Truth is not truth.

Understand something here, those on the other side, the operators working for Russia, they don't really care one way or the other if you vaccinate your kids.

Not really.

Although an unvaccinated population is vulnerable to other kinds of warfare as well, and if campaigns like this one increase that vulnerability, well, then Russia gets more bang for its ruble and its biological weapons become just that more effective. In the military, we call this a force multiplier.

The goal here is division, to sow discord in the target population, start a fight in the target country and keep that fight going, break down unity, create distrust at all levels of the target society.

This particular point of attack is one of hundreds.

If you watch social media for this sort of thing, you very quickly see dozens of other points of vulnerability in the population. And if you go looking, and you know what to look for, you very quickly find evidence of similar manipulation on those fronts.

Information Warfare can be, and is, used as a primary warfare area, as powerful or more so than any bomb. I've done it myself in combat. But when used in this manner, as the Russians are using it against us right now, it is a warfare support function. An enhancer. A force multiplier, one that makes other weapons, both kinetic and political, work better.

You don't need to hack election machines, if you can hack the voter.

You don't need to hack democracy if you can hack the citizen.

You don't need to physically destroy the United States if you can make Americans distrust the fundamental institutions of their own republic.

If you show that the election machines are vulnerable before the election and you do so in a manner that is purposely detectable – that you know will be detected and thus reported hysterically to the population by the target’s own media -- then you don't have to hack the actual elections themselves.

You only have to show that you can.

Couple that to amplification of voter disenfranchisement, enhanced by the aforementioned division, and you directly influence the population into believing that democracy cannot be trusted. That the fundamental fabric of the Republic is unsound. And the voters will stay home, or vote in a manner that creates division, or will not trust any results of the election and thus be prone to riot and protest and resistance against the resulting government.

You don’t have to destroy America, when Americans are willing to do it for you.

Now, the most effective countermeasure in this particular example should be obvious.

Secure the election.

By whatever means necessary, secure the election: paper ballots, secure isolated non-networked machines, validated public audits, whatever methodology of validation and integrity is most provable to the population.

Instead of closing voting stations, open more. Get citizens to polls. Make it easier for the population to vote, not harder.

End by law those institutions which disenfranchise voters, i.e. gerrymandering, certain types of primaries, and so on.

In other words, do what is necessary to demonstrate to all citizens that the fundamental institution of the Republic is sound and that democracy can be trusted and that their vote counts.

Of course, the only way to do that is to actually make democracy trustworthy and make every vote count.

Instead, ironically, those in charge have done exactly the opposite.

Why?

Because this is the natural tendency of those in power.

And that tendency, that weakness of our republic, is precisely the vulnerability this line of attack is designed to exploit.

Russian information warfare didn't create this vulnerability, it simply takes advantage of it so long as we do nothing to counter it.

This particular attack, the one outlined in the study linked to above, is insignificant when looked at in isolation. It is simply a target of opportunity. One of many. But when looked at as part of the larger whole, it is an assault in a much larger and far more significant war. One that we are losing.

Russia doesn't have to destroy America with bombs and missiles.

All they have to do is make us weaker and weaker while they grow stronger.

All they have to do is exploit the vulnerabilities we give them.

All they have to do is take advantage of the deliberately ignorant and the gleefully stupid.

History will do the rest.

And there is no greater student of history than a Russian like Vladimir Putin.

Information is not knowledge.

-- Albert Einstein

Before you old grognards rush to the comments to correct my opening line, technically the original books lacked any way to decide who goes first. For that rule, co-creators Gary Gygax and Dave Arneson supposed gamers would refer to Gary’s earlier Chainmail miniatures rules. In practice, players rarely saw those old rules. The way to play D&D spread gamer-to-gamer from Dave and Gary’s local groups and from the conventions they attended. D&D campaigns originally ran by word-of-mouth and house rules.

Chainmail lets players enact battles with toy soldiers typically representing 20 fighters. The rules suggest playing on a tabletop covered in sand sculpted into hills and valleys. In Chainmail each turn represents about a minute, long enough for infantry to charge through a volley of arrows and cut down a group of archers. A clash of arms might start and resolve in the same turn. At that scale, who strikes first typically amounts to who strikes from farthest away, so archers attack, then soldiers with polearms, and finally sword swingers. Beyond that, a high roll on a die settled who moved first.